What tokenization is – and what it’s not

by Jakob Bosshar, Head of Business Operations @ 21X

Part 1

Tokenization is no longer an abstract vision but a concrete infrastructure development that is actively shaping real markets.¹ While the term has long been used loosely, a more precise understanding is taking hold: tokenization is a technical and regulatory framework that transforms both existing and entirely new assets and contractual structures into digital, programmable units.¹ ² Properly understood, it is not a radical upheaval of the financial system but a pragmatic modernization: a logical evolution of market infrastructure toward natively digital, executable market objects.¹ ⁴

At its core, tokenization means that an asset or right – whether it is a traditional security, a claim, a contractual agreement, or a newly created financial instrument – is not only digitally represented but also becomes technically executable within its registry logic. Ownership rights, cash flows, contractual obligations, transfer restrictions, and compliance requirements are embedded in smart contracts that not only store information but also execute processes automatically.¹ ²

Citi aptly describes this development as “a natural evolution of financial markets, in which assets become native digital objects with executable logic.”¹ This transition opens up gains in efficiency, transparency, and speed that are difficult to achieve with today’s fragmented post-trade infrastructure.¹ ²

Tokenization is thus more than just digital representation:

An electronic security recorded in the book-entry system of a central securities depository (CSD), for example, is digital but not tokenized. Tokenization requires:

- A DLT or blockchain ledger as a legally relevant register,

- Smart contracts that implement rights, obligations, and transfer rules,

- Programmable execution of issuance, transfer, lifecycle events, and settlement.¹ ²
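To make these three requirements more concrete, here is a deliberately simplified sketch in Python. The class `TokenRegister`, its whitelist rule, and the instrument name are hypothetical illustrations, not 21X's implementation or any real smart contract platform's API; a production system would run comparable logic as a smart contract on a DLT rather than in a single Python process. The sketch shows the register as the record of ownership, a transfer rule enforced at the moment of transfer, and a lifecycle event (a pro-rata cash distribution) executed directly from register data.

```python
from dataclasses import dataclass, field
from decimal import Decimal


@dataclass
class TokenRegister:
    """Hypothetical in-memory stand-in for a DLT register plus its smart-contract rules."""

    instrument: str
    whitelist: set[str] = field(default_factory=set)        # hypothetical eligibility rule
    balances: dict[str, int] = field(default_factory=dict)  # the register: holder -> units

    def issue(self, holder: str, units: int) -> None:
        # Issuance: new units are created directly in the register.
        if holder not in self.whitelist:
            raise PermissionError(f"{holder} is not an eligible holder")
        if units <= 0:
            raise ValueError("units must be positive")
        self.balances[holder] = self.balances.get(holder, 0) + units

    def transfer(self, sender: str, receiver: str, units: int) -> None:
        # Transfer: the rule check and the register update happen in one step,
        # so the updated register is itself the settlement record.
        if receiver not in self.whitelist:
            raise PermissionError(f"{receiver} is not an eligible holder")
        if units <= 0 or self.balances.get(sender, 0) < units:
            raise ValueError("invalid amount or insufficient balance")
        self.balances[sender] -= units
        self.balances[receiver] = self.balances.get(receiver, 0) + units

    def distribute(self, total_payment: Decimal) -> dict[str, Decimal]:
        # Lifecycle event: split a cash flow (e.g. a coupon) pro rata across current holders.
        outstanding = sum(self.balances.values())
        if outstanding == 0:
            return {}
        return {
            holder: total_payment * units / outstanding
            for holder, units in self.balances.items()
            if units > 0
        }


# Usage: issue 100 units, transfer 40, then distribute a payment of 1,000.
bond = TokenRegister("EXAMPLE-BOND", whitelist={"alice", "bob"})
bond.issue("alice", 100)
bond.transfer("alice", "bob", 40)
print(bond.balances)                     # {'alice': 60, 'bob': 40}
print(bond.distribute(Decimal("1000")))  # {'alice': Decimal('600'), 'bob': Decimal('400')}
```

Because the rule check and the balance update happen in the same step, there is no separate reconciliation between a trade record and an ownership record; this is the sense in which the register itself becomes executable.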

Tokenization is therefore an infrastructural advancement rather than a disruption: the next step in the evolution from paper registers to electronic registers to programmable registers.¹ ⁴

What the token does not change

Economically, it is crucial that the token does not change the substance of the asset. Deloitte emphasizes that tokenization does not create a new asset, but rather a technical container for existing rights.²

Within the EU legal framework, the following applies:

- A tokenized financial instrument remains a financial instrument within the meaning of MiFID II.

- It remains subject to CSDR and the Prospectus Regulation; where applicable, the EU DLT Pilot Regime (Regulation (EU) 2022/858) allows authorized DLT market infrastructures to apply for targeted exemptions from specific requirements.⁵

- Tokenization changes neither the risk profile nor the issuer’s liability; it only transforms the registration and settlement logic.

Legal frameworks outside the EU follow the same basic logic, for example:

- Liechtenstein (Token and VT Service Provider Act, TVTG) explicitly defines tokens as representations of existing rights, not as independent assets.⁶

- Switzerland (DLT Act, which amended the Code of Obligations and the Book-Entry Securities Act) introduced “ledger-based securities” (Registerwertrechte), which are economically identical to traditional securities but are recorded and transferred via a DLT register.⁷

- The United States does not yet have a unified federal tokenization regime comparable to the EU DLT Pilot Regime, but regulatory progress has accelerated. In 2025, the Guiding and Establishing National Innovation for U.S. Stablecoins Act (GENIUS Act) was signed into law, establishing the first federal regulatory framework for USD-backed payment stablecoins and clarifying oversight responsibilities among regulators.⁴

These international examples show that a global consensus is emerging:

Tokenization is a technical innovation in register technology, not a redefinition of the financial instrument. M. Cremers consistently argues that this is precisely what makes tokenization interesting for banks: it operates within the regulatory logic, not outside it.³

What tokenization is not

Several common misconceptions should be explicitly addressed.

- Tokenization is not a data security measure.

Tokenization in capital markets should not be confused with tokenization as a data security technique. Data security tokenization, as used in card payments, replaces sensitive data such as card numbers with non-sensitive placeholders. Capital market tokenization, by contrast, implements the legally binding register itself on DLT, making the token a functional component of ownership and transfer rather than a data substitute.

- Tokenization does not generate liquidity.

Liquidity is created by market participants, not by technical containers. Tokenization can facilitate trading, but it cannot generate demand.¹ ²

- Fractionalization is not an end in itself.

Dividing assets into small units only creates value if the legal structure and market infrastructure are viable. Roland Berger emphasizes that fractionalized real-world assets (RWAs) provide little value without functioning secondary markets.⁴

- Tokenization is not a regulatory shortcut.

It does not bypass regulation; it implements it, for example by encoding holding periods, investor categories, or transfer restrictions as smart contract logic.²
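As an illustration of what implementing regulation as smart contract logic can mean, the sketch below encodes two of the constraints just named, a holding period and permitted investor categories, as an explicit pre-transfer check. The `Holder` and `TransferPolicy` types, the category labels, and the 180-day period are hypothetical assumptions chosen for the example; actual rules depend on the instrument and the applicable law.

```python
from dataclasses import dataclass
from datetime import date, timedelta


@dataclass(frozen=True)
class Holder:
    name: str
    category: str      # hypothetical labels, e.g. "professional" or "retail"
    acquired_on: date  # date the holder acquired the position


@dataclass(frozen=True)
class TransferPolicy:
    """Hypothetical per-instrument rule set, checked before every transfer."""

    allowed_categories: frozenset[str]
    holding_period: timedelta

    def check(self, sender: Holder, receiver: Holder, on: date) -> list[str]:
        # Collect every violated rule; an empty list means the transfer may proceed.
        violations = []
        if on - sender.acquired_on < self.holding_period:
            violations.append("holding period has not yet expired")
        if receiver.category not in self.allowed_categories:
            violations.append(f"investor category '{receiver.category}' is not permitted")
        return violations


# Usage: a 180-day holding period and professional investors only.
policy = TransferPolicy(frozenset({"professional"}), timedelta(days=180))
fund = Holder("fund", "professional", date(2025, 1, 10))
retail = Holder("retail-investor", "retail", date(2025, 1, 10))
bank = Holder("bank", "professional", date(2024, 6, 1))

print(policy.check(fund, retail, date(2025, 3, 1)))  # both rules fail
print(policy.check(fund, bank, date(2025, 9, 1)))    # [] -> transfer allowed
```

The check returns all violated rules instead of stopping at the first one, which makes the outcome easier to audit; an on-chain contract would typically reject (revert) the transfer whenever the list is non-empty.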

Conclusion

Tokenization is not a new asset class but an infrastructural advancement that enables the programmable execution of existing rights and processes at the register level without changing their legal or economic substance. European regulatory frameworks provide a robust basis for institutional adoption.

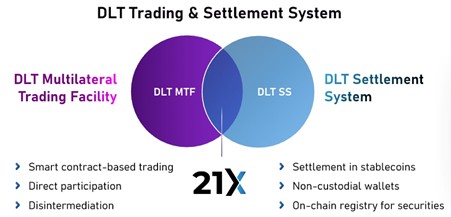

The crucial step now is to move from definition to implementation. Regulated market infrastructures such as 21X are already demonstrating how tokenized instruments can be traded and settled within the existing European legal framework.

Institutional market participants should test tokenization in regulated market environments to gain operational experience and make informed strategic decisions about their infrastructure.

In part 2 of our look at tokenization, we shift the focus from conceptual differentiation to practical implementation. We examine how DLT and smart contracts are being applied in practice, where institutional adoption is already visible, and which framework conditions are shaping the next scaling phase. The focus is on progress, practical experience, and the paths through which tokenization is gradually being integrated into capital market infrastructure.

Make sure you catch the second installment – and other news and articles – by following 21X.

References

¹ Citi GPS (2023). Money, Tokens and Games.

² Deloitte (2019). The tokenisation of assets is disrupting the financial industry.

³ Cremers, M. (2024). Tokenisation and the Banking System.

⁴ Roland Berger (2023). RWA Tokenisation Market Outlook 2030.

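⁵ European Parliament and Council of the European Union (2022). Regulation (EU) 2022/858 on a pilot regime for market infrastructures based on distributed ledger technology (DLT Pilot Regime).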

⁶ Liechtenstein: Token and VT Service Provider Act (TVTG), LGBl. 2019 No. 301.

⁷ Switzerland: Federal Act on the Adaptation of Federal Law to Developments in Distributed Ledger Technology (DLT Act), in force since 2021; in particular amendments to the Book-Entry Securities Act (BEG).